Page 66 - Kỷ yếu hội thảo khoa học lần thứ 12 - Công nghệ thông tin và Ứng dụng trong các lĩnh vực (CITA 2023)

P. 66

50 K Y U H I TH O KHOA H C QU C GIA V CNTT VÀ NG D V C L N TH 12

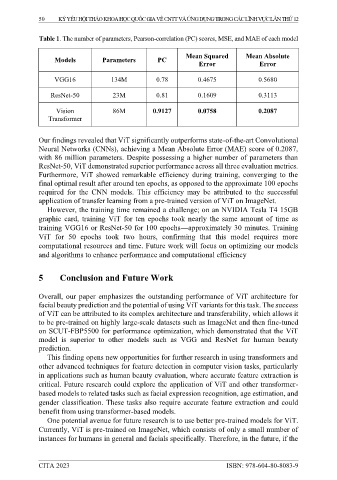

Table 1. The number of parameters, Pearson-correlation (PC) scores, MSE, and MAE of each model

Mean Squared Mean Absolute

Models Parameters PC

Error Error

VGG16 134M 0.78 0.4675 0.5680

ResNet-50 23M 0.81 0.1609 0.3113

Vision 86M 0.9127 0.0758 0.2087

Transformer

Our findings revealed that ViT significantly outperforms state-of-the-art Convolutional

Neural Networks (CNNs), achieving a Mean Absolute Error (MAE) score of 0.2087,

with 86 million parameters. Despite possessing a higher number of parameters than

ResNet-50, ViT demonstrated superior performance across all three evaluation metrics.

Furthermore, ViT showed remarkable efficiency during training, converging to the

final optimal result after around ten epochs, as opposed to the approximate 100 epochs

required for the CNN models. This efficiency may be attributed to the successful

application of transfer learning from a pre-trained version of ViT on ImageNet.

However, the training time remained a challenge; on an NVIDIA Tesla T4 15GB

graphic card, training ViT for ten epochs took nearly the same amount of time as

training VGG16 or ResNet-50 for 100 epochs approximately 30 minutes. Training

ViT for 50 epochs took two hours, confirming that this model requires more

computational resources and time. Future work will focus on optimizing our models

and algorithms to enhance performance and computational efficiency

5 Conclusion and Future Work

Overall, our paper emphasizes the outstanding performance of ViT architecture for

facial beauty prediction and the potential of using ViT variants for this task. The success

of ViT can be attributed to its complex architecture and transferability, which allows it

to be pre-trained on highly large-scale datasets such as ImageNet and then fine-tuned

on SCUT-FBP5500 for performance optimization, which demonstrated that the ViT

model is superior to other models such as VGG and ResNet for human beauty

prediction.

This finding opens new opportunities for further research in using transformers and

other advanced techniques for feature detection in computer vision tasks, particularly

in applications such as human beauty evaluation, where accurate feature extraction is

critical. Future research could explore the application of ViT and other transformer-

based models to related tasks such as facial expression recognition, age estimation, and

gender classification. These tasks also require accurate feature extraction and could

benefit from using transformer-based models.

One potential avenue for future research is to use better pre-trained models for ViT.

Currently, ViT is pre-trained on ImageNet, which consists of only a small number of

instances for humans in general and facials specifically. Therefore, in the future, if the

CITA 2023 ISBN: 978-604-80-8083-9