Proceedings of the 12th Scientific Conference: Information Technology and Its Applications in Various Fields (CITA 2023)


Fig. 10. Precision and recall

With fairly high precision and recall, the YOLOv7-E6 model classifies objects consistently, although it is not far superior to the other models.
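For reference, precision and recall are computed from detection counts as TP / (TP + FP) and TP / (TP + FN). The sketch below shows this arithmetic; the counts are hypothetical and are not taken from the experiments in Fig. 10, and the function name `precision_recall` is illustrative only.

```python
def precision_recall(tp: int, fp: int, fn: int) -> tuple[float, float]:
    """Return (precision, recall) from detection counts.

    tp: detections matched to a ground-truth weapon (e.g. IoU >= 0.5)
    fp: detections that match no ground-truth weapon
    fn: ground-truth weapons the model missed
    """
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Hypothetical counts, for illustration only.
p, r = precision_recall(tp=90, fp=10, fn=30)
print(f"precision={p:.3f} recall={r:.3f}")  # precision=0.900 recall=0.750
```

High precision means few false alarms; high recall means few missed weapons. A detector used for warnings needs both to be high at the same time.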

The model achieves very good results when the input image is of good quality. In special cases, such as a pistol that appears as only a single point, the model still produces results with an accuracy of about 30-80%, specifically in the following cases:

One pixel; one partially covered; one point; bad condition.

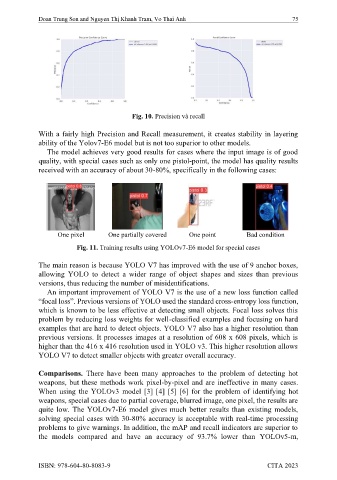

Fig. 11. Training results using the YOLOv7-E6 model for special cases

The main reason is that YOLOv7 uses 9 anchor boxes, which allow it to detect a wider range of object shapes and sizes than previous versions and thus reduce the number of misidentifications.
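To illustrate how a set of 9 anchors covers different shapes and sizes, the sketch below assigns a ground-truth box to the anchor whose shape overlaps it most (IoU computed from width and height only, with both boxes sharing a center). This is the matching rule popularized by YOLOv3 and is not claimed to be YOLOv7's exact label-assignment procedure. The anchor values are the well-known YOLOv3 COCO defaults, used here as an assumption because the paper does not list its anchors; the box sizes are hypothetical.

```python
# Nine (width, height) anchors in pixels: YOLOv3's COCO defaults, used only
# as illustrative values (assumption; not taken from this paper).
ANCHORS = [
    (10, 13), (16, 30), (33, 23),       # small objects
    (30, 61), (62, 45), (59, 119),      # medium objects
    (116, 90), (156, 198), (373, 326),  # large objects
]


def wh_iou(box_wh, anchor_wh):
    """IoU of two boxes that share the same center, from widths/heights only."""
    bw, bh = box_wh
    aw, ah = anchor_wh
    inter = min(bw, aw) * min(bh, ah)
    union = bw * bh + aw * ah - inter
    return inter / union


def best_anchor(box_wh):
    """Index of the anchor whose shape best matches the ground-truth box."""
    ious = [wh_iou(box_wh, a) for a in ANCHORS]
    return max(range(len(ANCHORS)), key=ious.__getitem__)


# A tiny pistol and a large, close-up one (hypothetical sizes in pixels).
print(best_anchor((12, 14)))    # 0 -> anchor (10, 13)
print(best_anchor((140, 200)))  # 7 -> anchor (156, 198)
```

Because each anchor specializes in one shape and scale, a tiny pistol and a close-up one are handled by different anchors instead of competing for a single prior.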

Another important improvement in YOLOv7 is the use of a loss function called focal loss in place of the standard cross-entropy loss function, which is known to be less effective at detecting small objects. Focal loss addresses this problem by reducing the loss weight of well-classified examples and focusing training on hard examples, that is, objects that are difficult to detect. YOLOv7 also uses a higher input resolution than previous versions: it processes images at 608 x 608 pixels, compared with the 416 x 416 resolution used in YOLOv3. This higher resolution allows YOLOv7 to detect smaller objects with greater overall accuracy.
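The down-weighting described above can be seen numerically. The sketch below implements the binary focal loss FL(p_t) = -α_t (1 - p_t)^γ log(p_t) as introduced with RetinaNet, using its commonly cited defaults γ = 2 and α = 0.25; these values are an assumption, since the paper does not state which (if any) were used, and this scalar version is not YOLOv7's training code.

```python
import math


def binary_cross_entropy(p: float, y: int) -> float:
    """Standard BCE for one prediction p = P(object), label y in {0, 1}.

    p must lie strictly between 0 and 1.
    """
    p_t = p if y == 1 else 1.0 - p
    return -math.log(p_t)


def focal_loss(p: float, y: int, gamma: float = 2.0, alpha: float = 0.25) -> float:
    """Binary focal loss: -alpha_t * (1 - p_t)**gamma * log(p_t).

    gamma and alpha default to commonly used values (assumption).
    """
    p_t = p if y == 1 else 1.0 - p
    alpha_t = alpha if y == 1 else 1.0 - alpha
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


# An easy positive (p = 0.95) versus a hard positive (p = 0.1),
# e.g. a barely visible pistol.
for p in (0.95, 0.1):
    ce, fl = binary_cross_entropy(p, 1), focal_loss(p, 1)
    print(f"p={p:.2f}  CE={ce:.4f}  FL={fl:.6f}  FL/CE={fl / ce:.6f}")
```

For a positive example the ratio FL/CE equals α(1 - p_t)^γ, so the easy example is scaled by 0.25 · 0.05² = 0.000625 while the hard one keeps 0.25 · 0.9² = 0.2025 of its cross-entropy loss. Training is therefore dominated by the hard cases.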
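The effect of the larger input can be checked with simple arithmetic. The sketch below compares the two resolutions stated in the text, using the output strides 8, 16 and 32 of YOLOv3's three detection heads (other YOLO variants may add further heads; that is omitted here). It also shows how large a hypothetical 20-pixel pistol in a 1280-pixel-wide frame appears after the frame's longer side is resized to the network input, an assumed letterbox-style preprocessing.

```python
# Output strides of YOLOv3's three detection heads (assumption for illustration).
STRIDES = (8, 16, 32)


def grid_sizes(side: int) -> list[int]:
    """Grid cells per side at each stride for a square input of `side` pixels."""
    return [side // s for s in STRIDES]


def object_size_after_resize(obj_px: float, src_side: int, dst_side: int) -> float:
    """Apparent object size (pixels) after scaling the frame's longer side
    from src_side to dst_side, as in letterbox resizing (assumption)."""
    return obj_px * dst_side / src_side


for side in (416, 608):
    print(side, grid_sizes(side), f"{side * side:,} pixels")

# Hypothetical 20-pixel pistol in a 1280-pixel-wide frame.
for side in (416, 608):
    print(side, object_size_after_resize(20, 1280, side))
```

At 416 x 416 the grids are 52, 26 and 13 cells per side (173,056 pixels); at 608 x 608 they are 76, 38 and 19 (369,664 pixels, about (19/13)² ≈ 2.14 times as many). The hypothetical pistol shrinks to 6.5 pixels at 416 but 9.5 pixels at 608, which leaves the detector more evidence for small weapons.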

Comparisons. There have been many approaches to detecting firearms, but these methods work pixel by pixel and are ineffective in many cases. When the YOLOv3 model [3] [4] [5] [6] is used to identify firearms, the results are quite poor in special cases such as partial occlusion, blurred images, or objects only one pixel in size. The YOLOv7-E6 model gives much better results than existing models: handling these special cases with 30-80% accuracy is acceptable for real-time processing that issues warnings. In addition, its mAP and recall exceed those of the compared models, while its accuracy of 93.7% is lower than that of YOLOv5-m,

ISBN: 978-604-80-8083-9 CITA 2023