Page 201 - Kỷ yếu hội thảo khoa học lần thứ 12 - Công nghệ thông tin và Ứng dụng trong các lĩnh vực (CITA 2023)

P. 201

Cong Tung Dinh, Thu Huong Nguyen, Huyen Do Thi, Nam Anh Bui 185

Each convolutional layer of the model is applied several filters to search for features

in the input image. The ResNet152-V2 model uses the Residual block proposed by

ResNet, which avoids the deterioration of accuracy when the neural network becomes

very deep. The ResNet152-V2 model also uses a bottleneck technique, allowing the

model to learn higher-resolution features without adding depth to the network. This

technique uses small-sized convolutional layers before using large-sized

convolutional layers to reduce calculation costs. The specifics of the models are

described as follows: The input layer is a 224x224x3 image. The convolutional layer

consists of 152 layers divided into different blocks. Each block consists of multiple

convolutional layers and activation layers. The Residual blocks of the ResNet152-V2

Residual network contain a number of building blocks designed to reduce the depth of

the network and reduce the deterioration of accuracy when the network becomes very

deep. Finally, there are trigger functions, pooling layers, and full connection layers.

ResNet152-V2 is an upgraded version of ResNet152, with improvements in

architecture and training methods to achieve greater accuracy. It has 152 layers and

8.0 million parameters, trained on an ImageNet dataset of 1.28 million images. For

the dataset in the paper, the prediction ResNet152-V2 model has an accuracy of

95.53%

3 Dataset

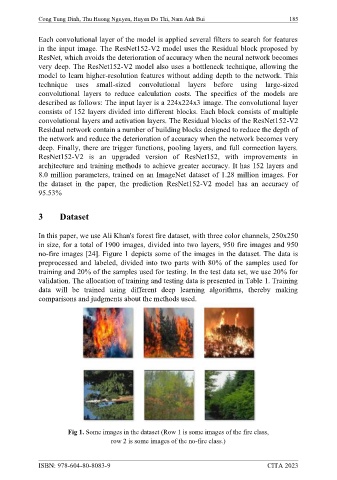

In this paper, we use Ali Khan's forest fire dataset, with three color channels, 250x250

in size, for a total of 1900 images, divided into two layers, 950 fire images and 950

no-fire images [24]. Figure 1 depicts some of the images in the dataset. The data is

preprocessed and labeled, divided into two parts with 80% of the samples used for

training and 20% of the samples used for testing. In the test data set, we use 20% for

validation. The allocation of training and testing data is presented in Table 1. Training

data will be trained using different deep learning algorithms, thereby making

comparisons and judgments about the methods used.

Fig 1. Some images in the dataset (Row 1 is some images of the fire class,

row 2 is some images of the no-fire class.)

ISBN: 978-604-80-8083-9 CITA 2023